
[dropcap]Sources[/dropcap] of inspiration are one thing we do not lack in machine learning. This is what, for me at least, makes machine learning research such a rewarding and exciting area to work in. We gain inspiration from our traditional neighbours in statistics, signal processing and control engineering, information theory and statistical physics. But our fortune continues, and we can take further inspiration from biological and evolutionary systems and, of importance to this series, the cognitive sciences of sociology, psychology and neuroscience.

I previously explored important inspirations for machine learning offered by neuroscience and unpacked the role of prediction, sparsity, modularity and complementary learning in building learning systems. But there are other skills expected of learning systems that are better understood at a higher level, using the perspective of cognitive science. Four topics that I hope to explore during this series, and that I think are amongst the most important, are:

- Causal reasoning. How can we build machine learning systems that learn from cause and effect and detect causal relationships? Humans have an impressive ability in this regard, and we shall explore aspects of causal induction, counterfactual inference and causal learning in cognitive systems. In machine learning we can connect this to reasoning over influence graphs and inference in directed acyclic graphs.

- Agents as scientists. As humans, we continuously explore and learn from the world around us. We form hypotheses, test them, and learn from them. Equipping machine learning with these investigative powers will lead us to examine abductive inference, active learning, Bayesian optimisation, and bandits.

- Cognitive semantics. How is it that humans are able to learn about meaning and build knowledge of concepts, objects and relationships? We can connect our understanding from cognitive science to that of statistical relational AI, the wider theory of relational learning, block-models and community discovery.

- Forming a theory of mind. Humans exist in societies, from which they also learn and obtain knowledge. Our cognitive tools include attribution theory, intentional agents and theory-theory. We can connect this to the wide thinking in economics, game theory and multi-agent systems.

Descriptive Framework for this Series

To better describe cognitive concepts and their relation to machine learning, I'll split the discussion into two parts. The first part will deal with cognitive science and offer the following description:

- Cognitive observation. We'll first make an observation of one of the many cognitive tasks that humans so easily demonstrate, and look at the evidence using experiments and observations from childhood development to experienced adults.

- Cognitive inspiration. We will distill our cognitive evidence to form a cognitive principle that will then serve as the inspiration for machine learning systems.

The second part will deal with machine learning, where I'll use the framework of model-inference-algorithm—one I have used for every essay in this blog:

- Probabilistic model. We specify a probabilistic model with the properties, structural assumptions and prior information that we believe best describe the problem and the outcomes we'd like to see.

- Inferential principle. The choice of model, together with the assumptions and approximations we make and the computational and accuracy constraints we must meet, influences the choice of inferential principle that we use to connect the data we observe to the model we have specified.

- Algorithm. Any choice of model and inference can be implemented in many different ways, and this is captured within an algorithm that describes the specific architectural components, types of computations used, and how computing platforms are exploited.
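To make the three-way split concrete, here is a minimal sketch of my own, not drawn from any later essay in the series. It assumes a toy Beta-Bernoulli coin-flip problem, and all names (`BetaBernoulliModel`, `posterior`, `posterior_streaming`, `posterior_mean`) are hypothetical and chosen only for illustration. The model is the prior and likelihood, the inferential principle is exact Bayesian updating through conjugacy, and the algorithm is one of several ways to carry out that update.

```python
from dataclasses import dataclass


@dataclass
class BetaBernoulliModel:
    """Model: x ~ Bernoulli(theta), with prior theta ~ Beta(a, b)."""
    a: float = 1.0  # prior pseudo-count of ones (assumed uniform prior)
    b: float = 1.0  # prior pseudo-count of zeros


def posterior(model, data):
    """Inferential principle: exact Bayesian updating via conjugacy.

    The posterior is again a Beta distribution whose parameters are the
    prior pseudo-counts plus the observed counts.
    """
    ones = sum(data)
    zeros = len(data) - ones
    return BetaBernoulliModel(model.a + ones, model.b + zeros)


def posterior_streaming(model, stream):
    """Algorithm: the same inference, implemented one observation at a time.

    This is a different computational realisation (constant memory,
    suitable for streams) of the identical model and inference.
    """
    a, b = model.a, model.b
    for x in stream:
        a += x
        b += 1 - x
    return BetaBernoulliModel(a, b)


def posterior_mean(model):
    """Mean of a Beta(a, b) distribution."""
    return model.a / (model.a + model.b)


data = [1, 0, 1, 1, 0, 1]  # illustrative observations, not real data
batch = posterior(BetaBernoulliModel(), data)
online = posterior_streaming(BetaBernoulliModel(), iter(data))
```

Both routes arrive at the same posterior, which is the point of the separation: the model and inferential principle fix what is computed, while the algorithm is free to vary how it is computed.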

Existing Cognitive Frameworks

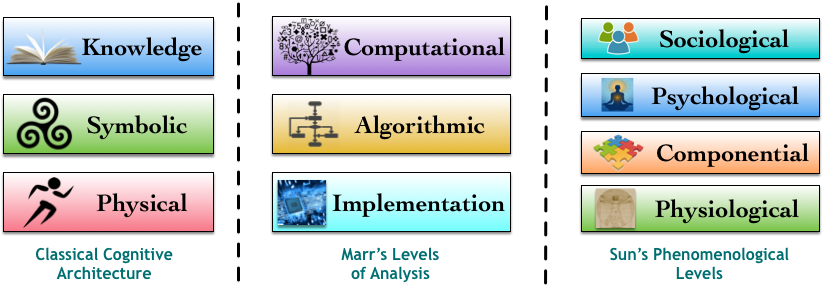

The descriptive framework I will use is simplified to make the connection between cognitive science and machine learning easier to describe. But cognitive science is rich with conceptual frameworks that are highly helpful in structuring an understanding of diverse cognitive phenomena. There are three frameworks that always provide inspiration:

1. Classical Cognitive Architecture

Newell and Simon (1976) [cite key=newell1976computer] unpacked the complexity of cognitive processes through a three-stage hierarchy, often referred to as the classical architecture. Their levels are:

- Knowledge. Explains the actions of agents by inferring their goals and analysis of the knowledge-bases needed to achieve them.

- Symbolic. An agent's knowledge and goals are encoded into symbolic structures that can be connected in different ways and manipulated to achieve a goal.

- Physical. Symbolic structures and their manipulations are put into physical form.

2. Marr's Levels of Analysis

Marr's levels [cite key=marr1982vision] have been highly influential, and this is a framework I found particularly useful in exploring the connections between neuroscience and machine learning. Marr's levels are very similar to Newell and Simon's and include:

- Computational. Deals with the high-level task that needs to be achieved by an agent.

- Algorithmic. Specifies how a computational problem can be solved.

- Implementational. An identified solution must be implemented within a brain.

3. Sun's Phenomenological Levels

This framework [cite key=sun2005levels] aims to focus on cognitive phenomena rather than a framework, and encourages us to think holistically about the relationship between an agent and its environment (both physical and sociological). We can reason using a hierarchy of four phenomena:

- Sociological. The collective behaviour of agents is important, and this level identifies and explains inter-agent and socio-cultural processes.

- Psychological. A focus on individual agent behaviours and the beliefs, knowledge, concepts and skills (motivation, emotion, perception) they possess.

- Componential. Cognitive function is realised through the composition of several components. We can specify a cognitive architecture (e.g., ACT-R, CLARION, NEF), computational paradigms (e.g., symbolic, connectionist, Bayesian) and can consider potential biological constraints.

- Physiological. The realisation of any identified components in a biological substrate.

There are other frameworks as well, including the abstract cognitive architecture and rational analysis that are also commonly used.

Final Thoughts

Cognitive science is a marketplace at which we can connect machine learning and statistics to neuroscience and even philosophy. There is much on offer, and I hope, over the course of this series, to distill many of the ideas that can serve as a powerful source of inspiration for the machine learning systems of the future.

[bibsource file=http://www.shakirm.com/blog-bib/cogML/prologue.bib]
