Keynote at the Stanford Human-centered AI 2023 Fall Conference on New Horizons in Generative AI: Science, Creativity, and Society. 📹 Video online.

Hello, hello, hello! Thank you so much for having me here today. Believe it or not, it’s actually my first time here at Stanford, so I’m really, really excited to be here this morning. You are a community that I don’t often get to connect with, and you play a key role in our AI ecosystem, so to have this stage today is a real honour. I want to express my deep thanks to Surya and Percy and Kaci, and all the other organisers of today’s conference, for giving me this gift of experiencing something for the first time. And of course, thanks to all of you in this audience, and to everyone watching online or whenever you might be seeing this.

It is the experience of firsts that I thought we could pick up on, and use as a theme for the next 20 minutes.

We already have a theme for today: new horizons in generative AI. I thought we could dig into it by looking at what is happening at the horizon. That’s why I’ve titled this talk The Responsibilities of the Pioneer.

The title deliberately echoes Noam Chomsky’s essay The Responsibility of Intellectuals, and I thought that could be a nice side connection for us.

Doing things for the first time (saying, writing, coding, reviewing, organising, deploying, leading), and so experiencing things anew, is something special in our lives and in our experiences as people. So as we kick off this morning, I would like you to think of your own firsts: maybe, like me today, the first time you found yourself here on this campus.

But I don’t want an unhealthy image of being first in a race to publish or deploy to come to mind, nor should your thoughts be about winning or getting the top prize or being first in a league table. Rather, I want our imaginations of firsts to be directed to things that are new and previously unknown, and the wonder and awe of those events and experiences.

Our conference today on new horizons in generative AI invites us to think about the frontier of research and innovation. And right now, especially in generative AI, we are either experiencing newness and firsts ourselves, or actively seeking them out. In these experiences, I am right there with you, and I’ll use my time to connect deeply with this research area, which has been a key part of my work over the last decade, and to share some of my views of this horizon: in AI for weather and climate, AI in drama, and AI with social purpose. The rest of today’s programme, and the amazing speakers and audience assembled here, will no doubt reveal other aspects of that horizon.

But we are not merely passive observers of the new horizons in generative AI: we are the people defining what the frontier and those new horizons will turn out to be. It is for this reason, at least for the duration of this talk, that I’m identifying us as pioneers, and as pioneering. And if we are pioneers, I think that gives us some foresight, some power, and ultimately, some responsibilities. To explore this, I’m going to use two stories to brainstorm aloud with you what some of the responsibilities of the pioneer might be, and I hope you will add your own thoughts on this in the discussion.

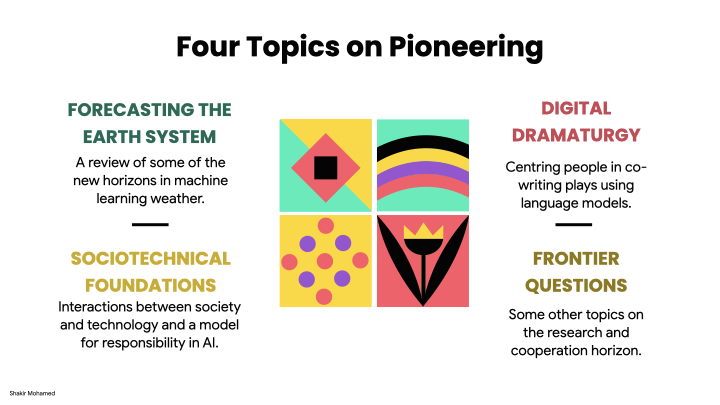

So, two stories: one about Forecasting the Earth System, and a second about Digital Dramaturgy. These stories will expose some features of the sociotechnical foundations of generative AI, which are my underlying message and call to action.

So onto

Story 1: Forecasting the Earth System

The year is 1922, and Lewis Fry Richardson has just published his book Weather Prediction by Numerical Process. What he doesn’t know yet is that this book will become a cornerstone of the field and industry of weather forecasting. The preceding centuries had given Richardson, and us today, one of the best exemplars of a triple-impact domain: one where research impact, deployment and product impact, and social impact are realised together through the search for improved weather forecasts and climate understanding.

As Richardson finalises his book, he adds his vision of a new horizon for weather forecasting, which he calls a “Forecast Factory”. This factory is a building with an enormous central chamber whose walls are painted to form a map of the globe. A large number of computers, human computers, are busy calculating the future weather. Richardson estimated that 64,000 people would be needed to carry out the calculations required to forecast the changing weather. Of course, the factory was the model through which Victorian-era industrialists thought about the world, not unlike the data centre and digital assistant models we might use today. Nevertheless, Richardson’s broad-strokes vision has been realised.

And triple impacts abound. For example, consider that the 3-day-ahead predictions of hurricane tracks we have available today are more accurate than the 1-day-ahead forecasts we had 40 years ago. So this technical field has increased both the accuracy and the prediction horizon of its forecasts, while enabling all the safety and social benefits that forewarning brings.

But the frontier of weather forecasting and climate understanding is always changing, and full of firsts. A major part of the reinvigoration in weather and climate we have today is from the significant progress we are making as a field in AI for earth systems forecasting. In my view, this is one of the most vibrant and rewarding areas for any of us to be working in right now.

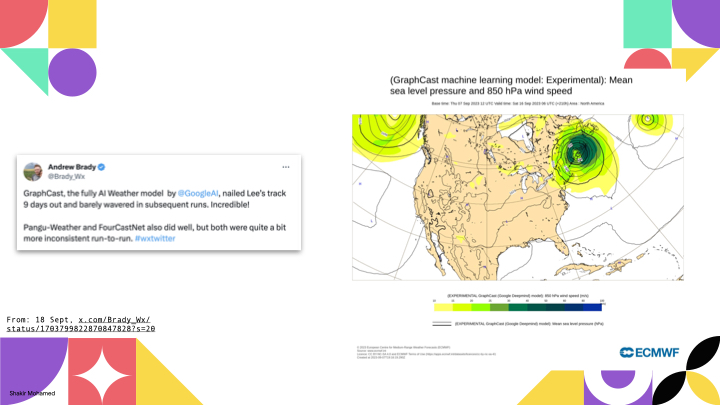

Some of the new horizons for weather forecasting came into view a few weeks ago with the formation of a storm system that was later named Hurricane Lee. The European Centre for Medium-Range Weather Forecasts (ECMWF), widely recognised as the world’s leading centre for medium-range forecasting, has now made available forecasts from several of a new generation of machine-learning-based models for 10-day-ahead weather forecasts. We refer to 10-day-ahead predictions as medium-range forecasts. The availability of these forecast models allowed people who work on hurricanes and extremes to monitor and record the performance of these new machine learning predictions, specifically predictions of wind and sea level pressure, which are key variables for analysing these storm systems. It’s still too early to say anything concrete about machine learning for hurricane forecasting, but maybe this social media post says enough for now, in particular highlighting the strong performance of a model we refer to as GraphCast, which I want to tell you a bit more about.

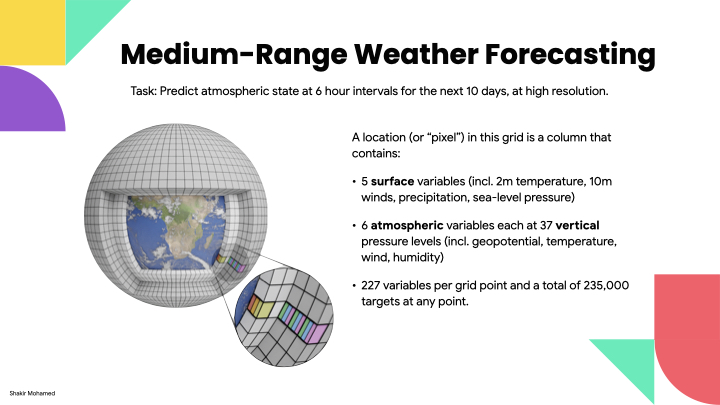

For medium-range forecasting, the prediction problem involves making predictions at 6-hour intervals from 6 hours to 10 days ahead, at around 25 km spatial resolution at the equator. In the data we used, each “pixel” in this grid on the earth contains 5 surface variables, along with 6 atmospheric variables each at 37 vertical pressure levels, for a total of 227 variables per grid point. Across the roughly one million points of the global grid, that means about 235 million variables for each time point we want to make a prediction for.
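The variable count in the paragraph above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes a 0.25° latitude-longitude grid of 721 × 1440 points (roughly 28 km at the equator, in line with the “around 25 km” above); the grid dimensions and the constant names are assumptions for illustration, not details given in the talk.

```python
# Back-of-the-envelope size of one medium-range forecast state.
# Assumption: a 0.25-degree latitude-longitude grid (721 x 1440 points).

SURFACE_VARS = 5          # surface variables per grid point (from the talk)
ATMOS_VARS = 6            # atmospheric variables per pressure level (from the talk)
PRESSURE_LEVELS = 37      # vertical pressure levels (from the talk)

N_LAT = 721               # assumed: 90S..90N inclusive in 0.25-degree steps
N_LON = 1440              # assumed: 0..359.75E in 0.25-degree steps

STEP_HOURS = 6            # forecast interval
HORIZON_HOURS = 10 * 24   # 10-day medium-range horizon


def variables_per_point() -> int:
    """Surface variables plus every atmospheric variable at every level."""
    return SURFACE_VARS + ATMOS_VARS * PRESSURE_LEVELS


def variables_per_state() -> int:
    """Total number of values describing the whole globe at one time."""
    return variables_per_point() * N_LAT * N_LON


def lead_times_hours() -> list[int]:
    """Lead times from 6 hours to 10 days in 6-hour steps."""
    return list(range(STEP_HOURS, HORIZON_HOURS + 1, STEP_HOURS))


if __name__ == "__main__":
    print(variables_per_point())          # 227
    print(f"{variables_per_state():,}")   # 235,680,480
    print(len(lead_times_hours()))        # 40
```

Under these assumptions each time point carries about 235.7 million values, and a 10-day forecast at 6-hour intervals has 40 lead times.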

One magic ingredient here is the commitment of international meteorological organisations and their member states to making numerical simulations available for us to use as training data, specifically a type of data known as reanalysis data that covers the last 40 years. The aim here is to build the best general-use base weather model we can. This base model can later be built upon by other users, like environment agencies or commercial operators, for their specific problems. And these problems are many: renewable energy, logistics, aviation, floods, safety planning and warnings, and so many more.

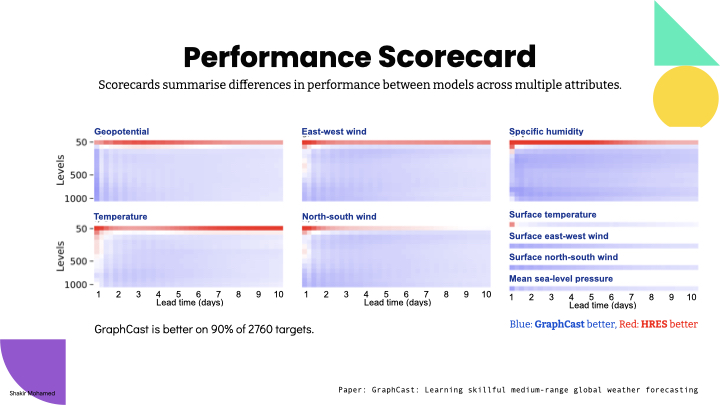

To show how this works, it is common to produce a scorecard, like the one you are seeing on the screen, that visually summarises model performance across different variables and metrics: blue squares where the model is better than the operational system, and red where it is not. Importantly, scorecards also show performance across different subsets of the data, such as the southern versus the northern hemisphere. This scorecard shows that machine learning approaches can outperform operational weather systems. And so with this scorecard, we have affirmatively answered the long-standing question of whether data-driven machine learning approaches for weather forecasting can be competitive with world-leading operational forecasting systems.
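A scorecard like this can be sketched as a table of per-cell comparisons between a model and a baseline. The sketch below assumes one common scoring choice, a normalised RMSE difference, where a negative value (model error lower than the baseline’s) is coloured blue and anything else red. The variable names, lead times, regions and numbers are toy placeholders, not real forecast results.

```python
import math

# (model prediction, baseline prediction, ground truth) for one scorecard cell
Case = tuple[list[float], list[float], list[float]]


def rmse(pred: list[float], truth: list[float]) -> float:
    """Root-mean-square error between two equal-length sequences."""
    if not pred or len(pred) != len(truth):
        raise ValueError("pred and truth must be non-empty and of equal length")
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(pred))


def scorecard_cell(model: list[float], baseline: list[float],
                   truth: list[float]) -> tuple[float, str]:
    """Normalised RMSE difference and the colour of the scorecard square."""
    base = rmse(baseline, truth)
    if base == 0:
        raise ValueError("baseline error is zero; normalised difference undefined")
    diff = (rmse(model, truth) - base) / base
    # Negative: the model beats the baseline (blue). Otherwise it does not (red).
    return diff, ("blue" if diff < 0 else "red")


def build_scorecard(cases: dict[tuple[str, int, str], Case]
                    ) -> dict[tuple[str, int, str], tuple[float, str]]:
    """Score every (variable, lead time in hours, region) cell."""
    return {key: scorecard_cell(*case) for key, case in cases.items()}


def fraction_better(card: dict[tuple[str, int, str], tuple[float, str]]) -> float:
    """Share of cells in which the model beats the baseline."""
    return sum(colour == "blue" for _, colour in card.values()) / len(card)


if __name__ == "__main__":
    truth = [10.0, 12.0, 14.0]  # toy values, not real observations
    toy_cases = {
        ("z500", 24, "northern"): ([10.5, 12.0, 13.5], [11.0, 13.0, 13.0], truth),
        ("t850", 120, "southern"): ([12.0, 14.0, 16.0], [11.0, 13.0, 15.0], truth),
    }
    card = build_scorecard(toy_cases)
    for key, (diff, colour) in card.items():
        print(key, f"{diff:+.3f}", colour)
    print("fraction of cells where the model wins:", fraction_better(card))
```

Keeping the region as part of each cell’s key is what lets a scorecard report hemispheres or other subsets separately rather than as a single global average.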

Using graph neural networks, we are able to show state-of-the-art performance that significantly outperforms the most accurate operational deterministic global medium-range forecasting system on 90% of the 1380 verification targets we assessed. The model also outperforms other machine-learning-based approaches on 99% of the verification targets they report. It can generate a forecast in 60 seconds on a single deep learning chip, which we estimate is 1-2 orders of magnitude faster than traditional numerical weather prediction methods. And if I had more time, we could talk more about how base models for weather like GraphCast can be used for forecasting severe events like cyclones, atmospheric rivers, extreme heat and cold, and others.
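For readers new to graph neural networks, the core operation is message passing: each node gathers information from its neighbours and then updates its own state. This is a generic, minimal sketch of one sum-aggregation step in plain Python. It is not GraphCast’s architecture: a real model would replace the fixed weights (`self_weight` and `msg_weight`, both hypothetical) with learned neural networks and run many such steps over a graph laid out on the globe.

```python
from collections import defaultdict

Vector = list[float]


def add(a: Vector, b: Vector) -> Vector:
    """Element-wise sum of two vectors."""
    return [x + y for x, y in zip(a, b)]


def scale(a: Vector, s: float) -> Vector:
    """Multiply every element of a vector by s."""
    return [x * s for x in a]


def message_passing_step(node_feats: dict[int, Vector],
                         edges: list[tuple[int, int]],
                         self_weight: float = 0.5,
                         msg_weight: float = 0.5) -> dict[int, Vector]:
    """One round of sum-aggregation message passing.

    Each directed edge (src, dst) sends src's features to dst. A node's new
    features are a weighted mix of its old features and the sum of its
    incoming messages. Fixed weights stand in for learned functions.
    """
    dim = len(next(iter(node_feats.values())))
    inbox: defaultdict[int, Vector] = defaultdict(lambda: [0.0] * dim)
    for src, dst in edges:
        inbox[dst] = add(inbox[dst], node_feats[src])
    # Nodes with no incoming edges receive an all-zero message.
    return {node: add(scale(feats, self_weight), scale(inbox[node], msg_weight))
            for node, feats in node_feats.items()}


if __name__ == "__main__":
    # A three-node ring with edges in both directions.
    feats = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 0.0]}
    ring = [(0, 1), (1, 0), (1, 2), (2, 1), (2, 0), (0, 2)]
    print(message_passing_step(feats, ring))
```

After one step, every node on this small ring holds a blend of its own state and its neighbours’ states. Stacking steps lets information travel further across the graph.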

Now, I don’t want you to leave with the impression that we now have a replacement for widely-used numerical weather prediction methods, or the role of physical knowledge and simulation. Rather, what’s clear from the results of so many different contributors and machine learning groups across the world working in this area, maybe some of you in this room, is that rapid advances are being made in the use of machine learning for weather. We are seeing this in improvements compared to operational forecasts and across forecast horizons, and we hope this is part of opening up new ways of supporting the vital work of weather-dependent decision-making that is so important to the flourishing of our societies.

As a quick plug, if you have work in this general area, do consider submitting it to the ongoing JMLR special collection on Machine Learning addressing problems of Climate Change.

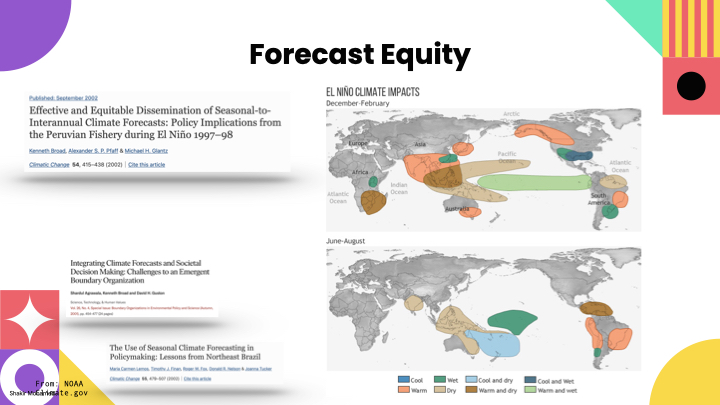

Yet, while so many technical advances continue to be made, another, less uplifting story is also unfolding. We go back in time again, now to 1997 and to Peru. Improved forecasts of El Niño have just become available, and with them a new tool for assessing shipping conditions and potential changes in ocean fish populations. This is where our story takes a turn: fishing companies, anticipating a weak season on the basis of these forecasts, chose instead to accelerate layoffs of their workers. So there is no triple impact here: these improved forecasts led to demonstrable harms, the opposite of their assumed benefit.

To give you a quick second example: today, the average forecast accuracy in high-income countries is more than 25% higher than the average accuracy in low-income countries. This makes forecast equity a distinct challenge for us.

So now we can come to the role of the pioneers, who see and make new horizons. Clearly, what I’m suggesting is that part of the responsibility of the pioneer is to direct attention to areas with broad societal benefit, like weather and climate. But we should not simply assume that new models of AI and weather will have a positive impact and be used for outcomes that lead to prosperity. In his essay, Chomsky wrote something very relevant to us:

“it will be quite unfortunate, and highly dangerous, if they [new advances] are not accepted and judged on their merits and according to their actual, not pretended, accomplishments.”

N. Chomsky, The Responsibility of Intellectuals

Creating the culture for this form of accountability is then one of our responsibilities.

Taken together, the responsibility of the pioneer lies in placing AI’s technical advances in their broader context: of history, variability in prediction quality, limits on who can access technology and how, its diverse use cases, and our changing climate. How we discharge this responsibility is a new horizon of its own.

Let’s leave the weather behind for a bit, and move on to a second story.

Story 2: Digital Dramaturgy

It’s 2022 and a series at the Edmonton Fringe is running called Plays By Bots. The description for the improv series was: “Bots write scripts. Performers act them out. Then they improvise the ending. Hilarity ensues. The result is an unpredictable work of human-machine co-creativity. You will laugh until your face hurts”.

The role of generative AI and language models in creative writing is, of course, very active today. I thought we could focus on AI for dramaturgy, which is the writing of dramas, scripts and plays.

One system that you can play with is called Dramatron, which composes text-generating models into a tool set up for dramaturgy. Dramatron builds a structural context for a play or drama via prompt chaining (at least for now), and aims to generate coherent scripts and screenplays complete with title, characters, story beats, location descriptions, and dialogue. This is a depiction of the hierarchical generation, which starts with a log-line that sets the scene for the co-writing exercise, and then allows chaining, regeneration and editing to provide a co-writing experience.
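The hierarchical generation described above can be sketched as a prompt chain in which every stage is conditioned on the log-line and on everything generated before it. This is a simplified sketch, not Dramatron’s actual code: `generate` stands in for any text-generation model, the stage names follow the list in the talk, and the real system produces dialogue scene by scene rather than in a single pass. Regeneration and editing are modelled here by keeping earlier stages fixed and rerunning the later ones.

```python
from typing import Callable, Optional

# Stage order follows the talk; each stage sees the log-line and all earlier stages.
STAGES = ["title", "characters", "beats", "locations", "dialogue"]

Generator = Callable[[str], str]  # hypothetical: prompt in, generated text out


def build_prompt(stage: str, context: dict[str, str]) -> str:
    """Concatenate everything written so far, then ask for the next stage."""
    parts = [f"{name.upper()}:\n{text}" for name, text in context.items()]
    parts.append(f"{stage.upper()}:")
    return "\n\n".join(parts)


def run_chain(log_line: str, generate: Generator) -> dict[str, str]:
    """Generate every stage in order, starting from the log-line."""
    story = {"log_line": log_line}
    for name in STAGES:
        story[name] = generate(build_prompt(name, story))
    return story


def regenerate_from(story: dict[str, str], stage: str, generate: Generator,
                    edited_text: Optional[str] = None) -> dict[str, str]:
    """Keep stages before `stage`; edit or regenerate it, then redo later ones."""
    idx = STAGES.index(stage)
    kept = {"log_line": story["log_line"]}
    for name in STAGES[:idx]:
        kept[name] = story[name]
    if edited_text is not None:
        kept[stage] = edited_text          # the writer's own edit is kept as-is
        remaining = STAGES[idx + 1:]
    else:
        remaining = STAGES[idx:]
    for name in remaining:
        kept[name] = generate(build_prompt(name, kept))
    return kept


def fake_generate(prompt: str) -> str:
    """Stand-in for a language model: names the stage it was asked for."""
    stage = prompt.rsplit("\n\n", 1)[-1].rstrip(":").lower()
    return f"<generated {stage}>"


if __name__ == "__main__":
    draft = run_chain("A lighthouse keeper befriends a stranded robot.", fake_generate)
    revised = regenerate_from(draft, "characters", fake_generate,
                              edited_text="ANNA, the keeper\nUNIT 7, the robot")
    for key, value in revised.items():
        print(f"{key}: {value}")
```

Because later stages are always rebuilt from the earlier ones, a writer’s edit to the characters flows through into the beats, locations and dialogue, which is what makes the chain usable as a co-writing loop rather than a one-shot generator.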

But Dramatron was actually designed to explore a more fundamental question: of what artists might actually want from co-writing tools, and how artists might participate in its design.

Later on, in a small conference room, a group of artists came together to write a script using this system, and to situate it within their practice, their understanding of the technology, and their views of their art and industry. Some of the artists would later even stage their co-written scripts. This group of people revealed a breadth of needs and insights that Dramatron’s designers alone could not have anticipated. Some artists saw new modes of interactivity and opportunity; others exposed its limitations and raised concerns, or concluded that it was simply not a tool for them. Here is what some of the participants said:

- “In terms of the interactive co-authorship process, I think it is great [...] ”

- “You know, with a bit of editing, I could take that to Netflix: just need to finesse it a little bit”.

- “Actually lots of the content [...] is misogynistic and patriarchal”

- “AI will never write Casablanca, or A Wonderful Life. It might be able to write genre boxed storytelling”.

Dramatron is an ideation and a co-writing tool, and I think a good example of taking a people-centred approach to AI, since its success is only possible if people actually find value in it.

The real story here is about how we can centre the needs of different groups of people, and different communities, in the development of new technical systems, whether that is in establishing better weather forecasts like I spoke about earlier (which I could tell you another story about), or in creating performance scripts, or in any other areas of application you might have in mind. This way of ensuring that people are also owners and shapers of the upcoming horizons is often referred to as participation. Participatory AI is an active area of work, one where there is a great deal of complexity and tension, but an area from which all our work can benefit.

Today, screenwriters in some regions have worked through their expectations of working with AI creativity tools, and have made clear their demands and constraints and how they want to work with new technologies, now and going forward. The truly sociotechnical nature of creative AI is fully on display here. And if ever you are confronted with the narrative of technological determinism that surrounds AI, then this story is one to bring up, since it makes clear that technology’s outcomes are never determined or inevitable, and that people and groups together do have agency in actively deciding the role and shape of technologies in their lives.

What there is in any maturing field, and generative AI is no exception, is uncertainty: about the path of future technology, its impacts, and its benefits. That uncertainty directly affects how we go about designing and releasing new methods. The tension between technological uncertainty and control is captured well by the Collingridge dilemma, summed up in the well-known quote: "When change is easy, the need for it cannot be foreseen; when the need for change is apparent, change has become expensive, difficult and time consuming."

What I hoped to expose with the story of Dramatron’s participatory nature is that we need not choose between uncertainty and premature action, or between certainty and expensive action. Participation leads us down a different path: one that includes people in the design of our methods, allows us to be comfortable with disagreement, and keeps us open to changing what we work on, and how we work, based on wider input. Participation changes the nature of how we research, design, evaluate, and deploy ML systems, making the work people-centred and turning it into an ongoing process. Participation done well can place our work on stronger ethical foundations by incorporating and accounting for the values of the societies we operate in. There is much newness and there are many firsts for us in this area, and they are worth embracing.

Taken together, the responsibility of the pioneer is to centre people, to rebuke deterministic thinking, and to work with uncertainty; and in so doing leave open a multiplicity of future outcomes and new horizons that are possible with AI.

Let me try and bring these two stories a bit closer. So onto

Sociotechnical Foundations

New approaches to weather forecasting and new ways of thinking about co-writing are just two of the many new horizons in science and creativity, and in their roles across societies. These are two wonderful fields through which we can explore the responsibilities of the pioneer. In both climate and creativity, a defining characteristic of the new horizons that I’ve been trying to point out is their fundamentally sociotechnical nature. The starting point for the sociotechnical foundations of generative AI is an understanding that the social and the technical are always interacting and mutually reinforcing.

To expound and discharge our responsibilities, we need strong sociotechnical foundations, where we ask at every stage how people, society and technology are interacting. Sociotechnical AI is all about adjusting and adapting the conceptual apertures we use as we go about our work: asking our technical and engineering work to account for a wider and more expansive set of considerations, while also bringing focus and manageability to the seeming vastness of social considerations. Sociotechnical approaches ask for an ecosystem view, and are a way of engaging with that ecosystem. With this, I’m not only pointing to a recognition of sociotechnical thinking; I am also pointing to a very specific approach to responsibility and responsible AI, and a firm foundation for it.

Let me invoke another line from Chomsky’s essay to add one further responsibility:

“If it is the responsibility of the intellectual to insist upon the truth, it is also [their] duty to see events in their historical perspective.”

The responsibility of the pioneer is to create a path dependency from the past, to the present, and for the future. That is a power to direct, create complex couplings, and see different kinds of outcomes. Outcomes that consider agency, uncertainty and control, science and quality and access, and community-centred participation.

So let me get to the end with a few

Frontier Questions

The frontier in weather and climate is one that has been reshaped across centuries, from initially divining weather from the stars, to the forecast factory, to maybe the forecast assistant or forecast agent of tomorrow. Today, the exciting approaches for weather forecasting are possible because, globally, countries and organisations have set up a system of open sharing of forecast data. That means that we who have new skills and ideas and curiosities are able to bring our intellectual energy, build on what has come before, and add new methods, benchmarks and technologies. Of course there is a vast horizon of what still remains for weather and climate and AI, amongst them:

- Continued innovation in environmental data sharing and use for public benefit.

- Better precipitation forecasts, which are a key priority since most models are weak here.

- Seasonal forecasts (3-12 months ahead) and decadal forecasts have historically been poor, yet there are many needs for such forecasts.

- Predictions for renewables, digital twins, and net-zero attainment.

- And causal methods for detection and attribution of significant events, amongst other problems.

Creativity is just as complex a landscape, one where technical tools for co-writing are confronted by the needs of people and artists, and by the fundamentally human work of enriching our cultures and memory. Creativity provides a perfect environment for sociotechnical approaches that are people-centred, and it opens up the landscape for us to ask much more complex questions about how our models are used, how they should be used, and the processes we use to make new innovations. Further questions include:

- Establishing further participatory and community-centred work to showcase and enable their effective use.

- More work on human-AI interactions, behavioural studies and evaluations.

- More historical and decolonial work that continues to provide public memory of what has come before, and that foregrounds rigour and humility.

- And perhaps for each of you, if you are a technical researcher, to dedicate one of your next papers to taking on a sociotechnical research question.

I’ll end here and thank you again for this wonderful opportunity today.

Wrap Up

If I’ve achieved my aims, then you have reached this point with three key messages:

- Firstly, the horizon for weather and climate is exciting, changing, and full of firsts and new opportunities. AI for Earth systems is a technically challenging area, one where generative models have proven to be a useful approach, that creates many collaboration opportunities, and that touches upon the entire sociotechnical system. You should get involved.

- Secondly, there is a specific and firm model for responsibility that is built on taking a sociotechnical approach to generative AI and our work. This will centre the role of people at all stages, advance participatory methods, create more demanding evaluations, and support more expansive views of safety and benefit.

- Finally, the responsibilities of the pioneer are ours, to take on and magnify. This involves engaging with historical context and path dependency, navigating sociotechnical uncertainty, making our contributions to the advancement of science, and directing machine learning towards social purpose.

The gift of a first is a special one, and I thank each of you, deeply, for allowing me to openly brainstorm and publicly hope with you in this way. My sincere gratitude and thanks.

As a short postscript, here are some resources that might be of interest:

- Noam Chomsky’s New York Review of Books essay on the Responsibility of Intellectuals.

- A paper on co-creativity and artist engagement, Co-Writing Screenplays and Theatre Scripts with Language Models: Evaluation by Industry Professionals.

- The Ada Lovelace Institute's evidence review on Foundation Models in the Public Sector.

- And the latest machine-learning-based forecasts deployed by the ECMWF, which include the GraphCast model I referred to, at charts.ecmwf.int

And a quick pointer to two papers I’ve been lucky to work on with many amazing collaborators:

- Work on 10 day weather predictions, GraphCast: Learning skillful medium-range global weather forecasting.

- And a paper on participation, Power to the People? Opportunities and Challenges for Participatory AI.

Again my deep thanks to you all.