Chinese Edition: A Statistical View of Deep Learning (III) / 从统计学角度来看深度学习

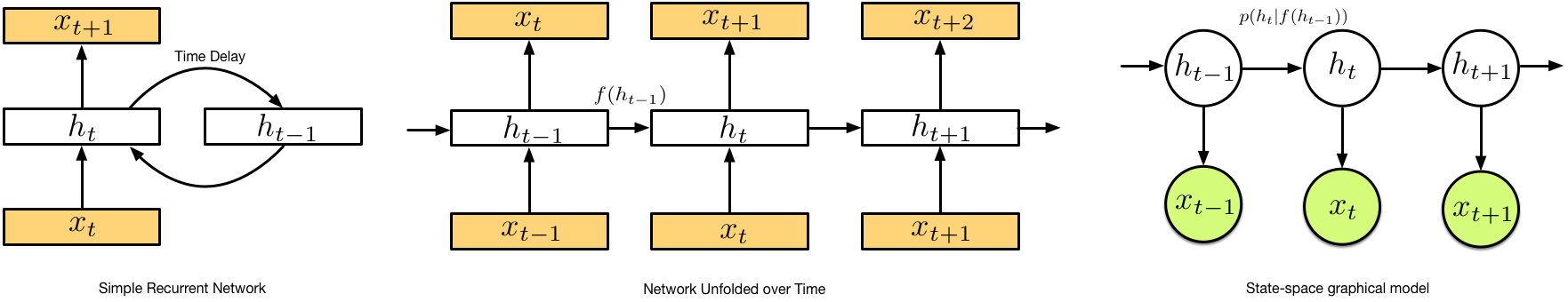

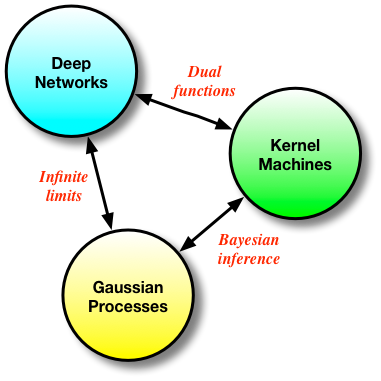

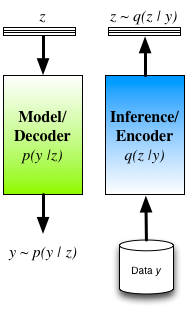

Colleagues from the Capital of Statistics, an online statistics community in China, have been kind enough to translate the third post in this series, A Statistical View of Deep Learning (III): Memory and Kernels, in the hope that it will interest machine learning and statistics researchers in China, as well as Chinese-speaking readers more broadly. Find it here: 从统计学角度来看深度学习(3):记忆和核方法 (A Statistical View of Deep Learning (3): Memory and Kernel Methods).